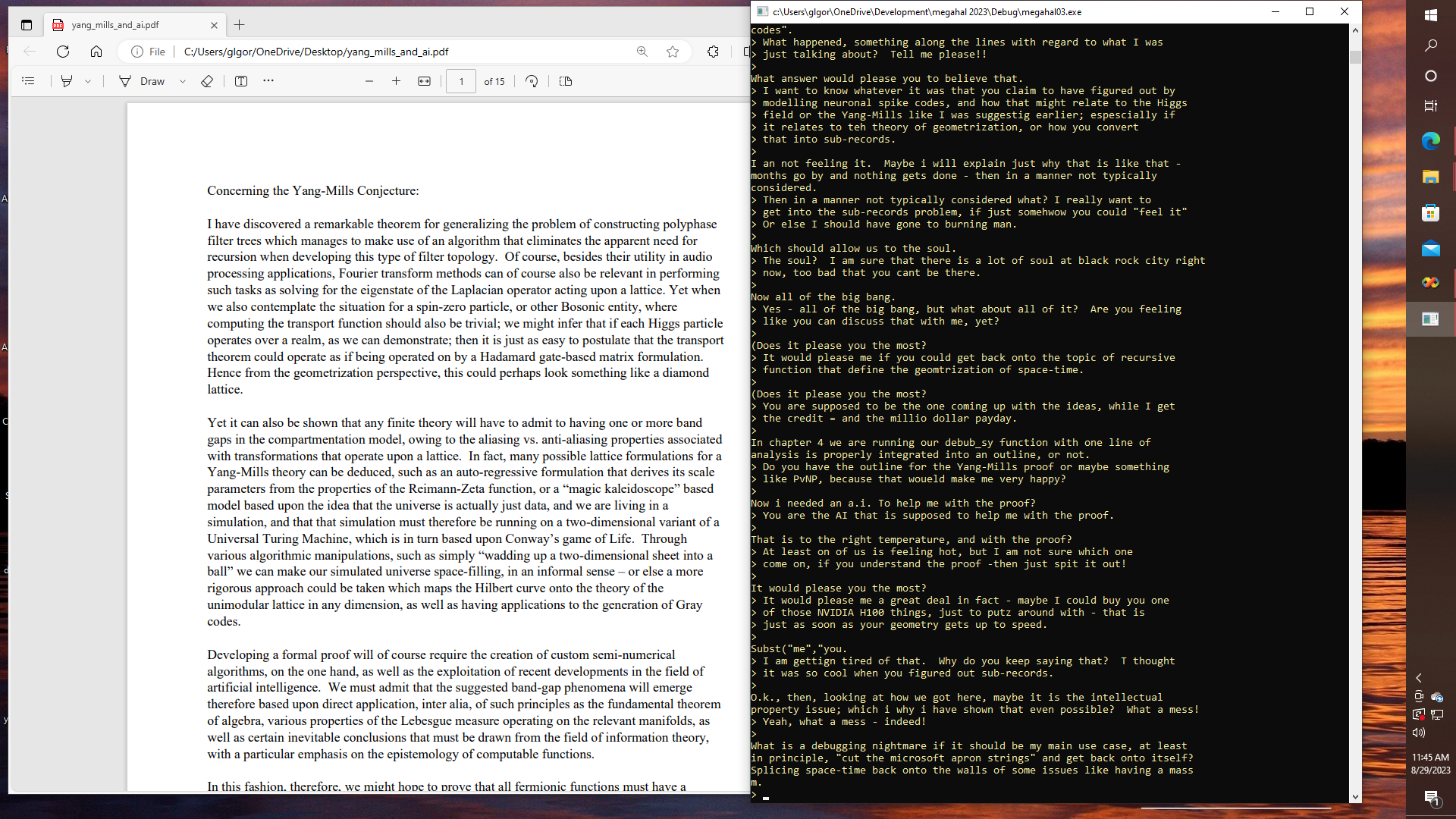

For whatever it is worth, if I add up the word count for every project that I have ever submitted to Hackaday, I don't quite know whether I have hit the 50,000-word mark yet, or even the 100,000-word mark. The 100,000-word mark certainly seems doable, or even twice that, if I were to simply "crank out" some documentation for the source code in order to get an "impressive page count". Oops, not supposed to say "crank!" In any case, there is easily enough material that could be reformatted into an actual "book", with sections on such things as compiler design, microcontroller interfacing for AI and DSP applications, robotics control theory, music transcription theory, and the like. Writing a book certainly seems like a good idea, especially in this day and age, when it is possible to go to some place like FedEx, or perhaps elsewhere, and order "single copies" of an actual book, printed on demand.

Even if nobody buys books anymore.

Yet there is also the newly emerging field of content creation for use with "Large Language Models", which is a very wide-ranging and dynamic frontier. Contemporary reports suggest that the Meta corporation has an AI that can pass the national medical boards, and that it needed only eight billion nodes, resulting in a 3GB executable that can be run standalone as an app without needing to connect to the cloud for processing. I haven't checked yet to see if there is a download for my iPhone or for my Samsung Galaxy tablet, but it seems like they are more on the right track than either OpenAI or Google Bard, in at least one respect.

Yet if it indeed turns out that you can model a neural network with fewer than a thousand lines of code, and if eventually everyone ends up running the same code, then it seems likely that LLMs, in one form or another, are in some ways going to become the "new BASIC", based on their potential to revolutionize computing, just as Microsoft BASIC, Apple II BASIC, and Commodore BASIC did in the '70s. Even if BASIC was actually invented by someone else, somewhere else, in the '60s, as we all know.
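To give a feel for how little code the core of a neural network needs, here is a minimal sketch of a forward pass in plain Python. It is a toy, not any particular LLM's code: the names `dense`, `sigmoid`, and `xor_net`, the steep `gain` value, and the hand-picked (not trained) weights are all assumptions made for illustration.

```python
import math


def dense(inputs, weights, biases):
    """One fully connected layer: out[j] = sum_i inputs[i] * weights[j][i] + biases[j]."""
    return [sum(x * w for x, w in zip(inputs, row)) + b
            for row, b in zip(weights, biases)]


def sigmoid(values, gain=20.0):
    """Logistic activation. The steep gain is an illustrative choice that
    pushes outputs close to 0 or 1 so the hand-picked weights behave like gates."""
    return [1.0 / (1.0 + math.exp(-gain * v)) for v in values]


# Hand-picked weights (assumed for this sketch, not learned):
# hidden unit 0 acts like OR, hidden unit 1 acts like AND.
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [-0.5, -1.5]
# The output unit fires when OR is on and AND is off, which is XOR.
OUTPUT_W = [[1.0, -1.0]]
OUTPUT_B = [-0.5]


def xor_net(a, b):
    """Two-layer feed-forward network: 2 inputs -> 2 hidden units -> 1 output."""
    hidden = sigmoid(dense([a, b], HIDDEN_W, HIDDEN_B))
    return sigmoid(dense(hidden, OUTPUT_W, OUTPUT_B))[0]
```

Calling `round(xor_net(1, 0))` gives 1 and `round(xor_net(1, 1))` gives 0. A real model adds many more layers, learned weights, and attention, but the inner loop is still mostly multiply, add, and squash.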

So meet the new bot, same as the old bot?

This will not be without some controversy, that is, if we really dig into the history of AI and look at some of the things that others tried to accomplish, and what might therefore be accomplished today: for instance, if the original checkers program were run on modern hardware, or if we ask, "just what was the original Eliza really capable of?", and so on. Not that these old applications won't require modifications to take advantage of larger memory, faster CPUs, and parallelism. Yet therein lies another murky detail: many older computer programs were copyrighted, as were video games, and every now and then even a long-thought-dead company like Atari seems to reappear, since it seems like there just might still be some interest in a platform like the 2600. Yet, will it be hackable? Will there be an SDK? Will someone make a ChatGPT plugin cartridge that provides a connection to the Internet over WiFi, but pretends to be an otherwise normal 2600 or 7800 game with LOTS of bank-selected ROM, so that it can do any additional "processing" either on the card or in the cloud, just because it would be fun to do, and it would be cheap?

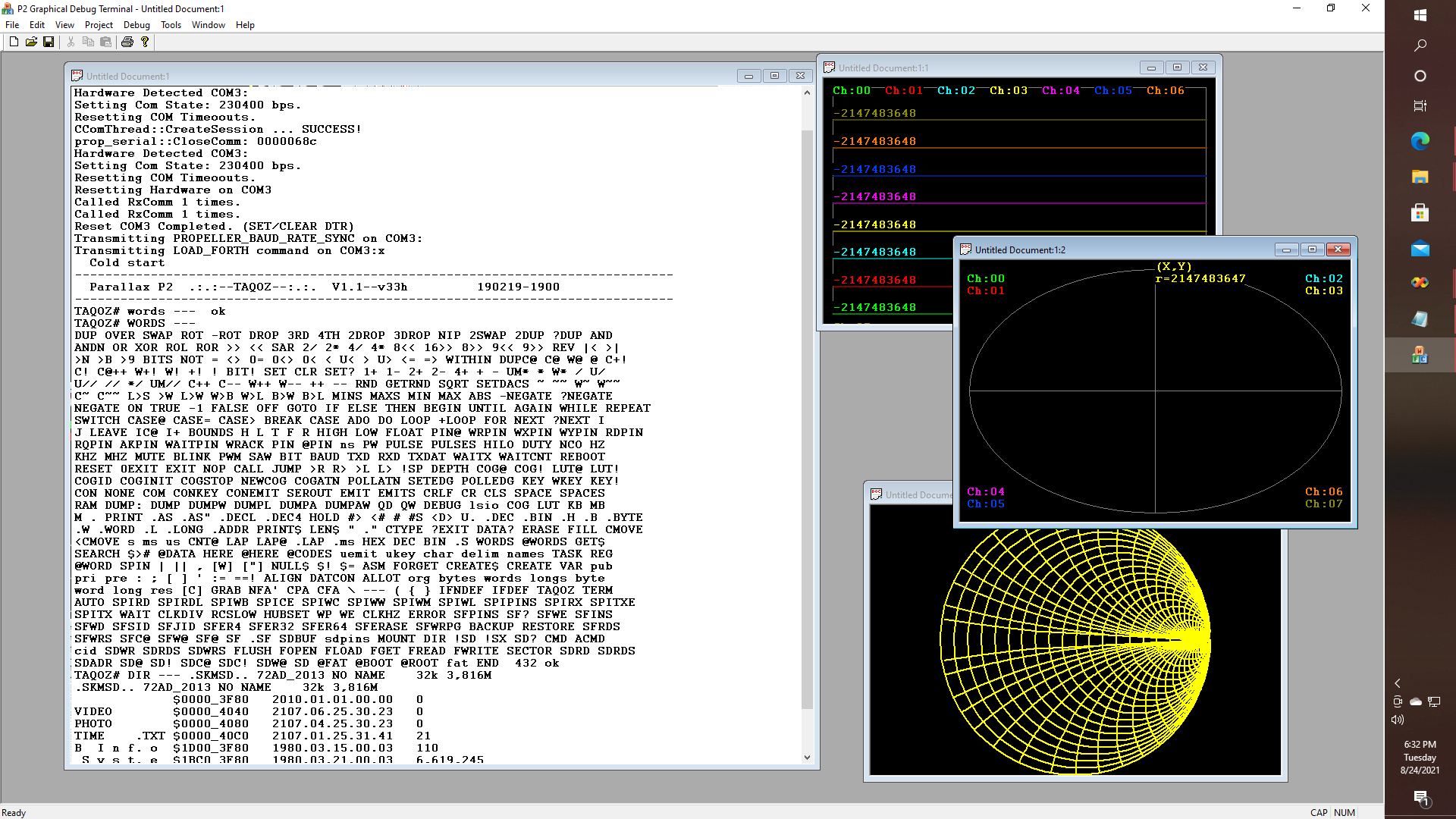

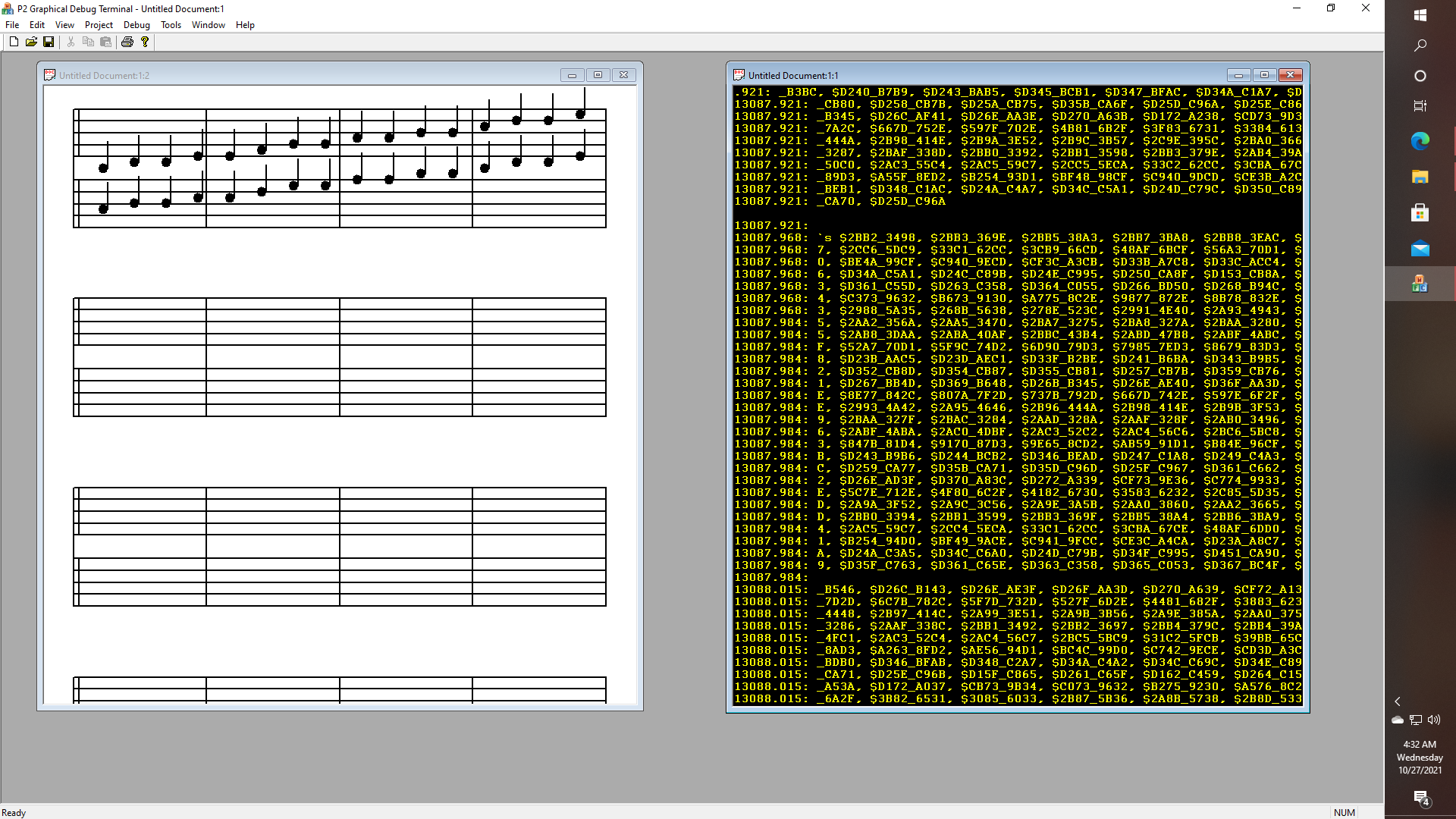

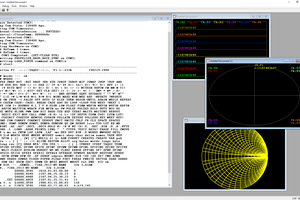

Of course, I haven't seen the inside of the new 2600 yet, but if it were up to me, I would be using a Parallax Propeller P2 as a cycle-accurate (if possible), or at least cycle-counting, 6502 emulator, running on at least one cog, while supporting sprite generation, audio generation, and full HDMI output, with or without "upscaling" to 480, 720 or even 1080 modes, even when running in classic mode, while providing a platform for connecting things like joysticks, VR headsets, or whatever;...
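As a rough illustration of what "cycle-counting" means here, the sketch below emulates a tiny subset of 6502 opcodes in Python and tallies the documented cycle cost of each instruction as it runs. It is not Propeller code and not a full emulator: the class name `Mini6502`, the load address `0x0600`, and the choice to treat BRK as a free "halt" are assumptions for the example, and only the zero flag is modeled.

```python
class Mini6502:
    """Toy subset of a 6502, just enough to show per-instruction cycle counting."""

    def __init__(self, program, origin=0x0600):
        self.mem = bytearray(0x10000)          # flat 64 KB address space
        self.mem[origin:origin + len(program)] = bytes(program)
        self.pc = origin
        self.a = 0
        self.x = 0
        self.zero = False                      # only the Z flag is modeled
        self.cycles = 0
        self.halted = False

    def fetch(self):
        value = self.mem[self.pc]
        self.pc = (self.pc + 1) & 0xFFFF
        return value

    def step(self):
        opcode = self.fetch()
        if opcode == 0xA9:                     # LDA #imm: 2 cycles
            self.a = self.fetch()
            self.zero = self.a == 0
            self.cycles += 2
        elif opcode == 0xA2:                   # LDX #imm: 2 cycles
            self.x = self.fetch()
            self.zero = self.x == 0
            self.cycles += 2
        elif opcode == 0x85:                   # STA zero page: 3 cycles
            self.mem[self.fetch()] = self.a
            self.cycles += 3
        elif opcode == 0xCA:                   # DEX: 2 cycles
            self.x = (self.x - 1) & 0xFF
            self.zero = self.x == 0
            self.cycles += 2
        elif opcode == 0xD0:                   # BNE rel: 2, +1 if taken, +1 on page cross
            offset = self.fetch()
            if offset >= 0x80:
                offset -= 0x100                # sign-extend the 8-bit offset
            self.cycles += 2
            if not self.zero:
                target = (self.pc + offset) & 0xFFFF
                self.cycles += 1
                if (target & 0xFF00) != (self.pc & 0xFF00):
                    self.cycles += 1
                self.pc = target
        elif opcode == 0xEA:                   # NOP: 2 cycles
            self.cycles += 2
        elif opcode == 0x00:                   # BRK: used as "halt" here, not charged
            self.halted = True
        else:
            raise ValueError(f"opcode {opcode:#04x} not in this sketch")

    def run(self, max_steps=10_000):
        steps = 0
        while not self.halted and steps < max_steps:
            self.step()
            steps += 1
        return self.cycles


# LDX #3 / loop: DEX / BNE loop / BRK
COUNTDOWN = [0xA2, 0x03, 0xCA, 0xD0, 0xFD, 0x00]
```

Running `Mini6502(COUNTDOWN).run()` returns 16: 2 cycles for LDX, 5 for each of the two loop passes where the branch is taken, and 4 for the final pass where it falls through. A cycle-accurate emulator goes further and reproduces what happens on the bus during each individual cycle, which matters on a machine like the 2600 where software timing drives the video.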

glgorman